Physical systems have particles with properties, locations, times, motions, energies, momenta, and relations. Particles can be independent or depend on each other.

degrees of freedom

Related particles have motion restrictions. Particles with no relations are free to move in all directions by translations, vibrations, and rotations. Systems have interchangeable states {degrees of freedom, entropy}. More particles and more particle independence increase degrees of freedom.

order

Order depends on direction constraints. Ordered systems have few possible states. Disordered systems have many possible states. Systems with high heat have more disorder because kinetic energy goes in random directions and potential energy decreases. Systems with work have less disorder because kinetic energy goes in one direction and potential energy increases in direction against field. Systems have disorder amount {entropy, heat}.

information

Systems with no relations have no information, because particles move freely and randomly, with no dependencies. Systems with relations have information about relations and dependencies. Systems with more degrees of freedom, less order, and more entropy have less information. Systems with fewer degrees of freedom, more order, and less entropy have more information. Because entropy relates to disorder, entropy relates to negative information.

information: amount

The smallest information amount (bit) specifies binary choice: marked or unmarked, yes or no, 0 or 1, or on or off. The smallest system has two possible independent states and one binary choice: 2^1 = 2, where 2 is number of states and 1 is number of choices. Choices can always reduce to binary choices, so base can always be two. Systems have number of binary choices, which is bit number.

information: probability

The smallest system is in one state or the other, and both states are equally probable, so states have probability one-half: 1/2 = 1 / 2^1. State probability is inverse of number of independent states.

information: states

Systems have independent states and dependent states. Dependent states are part of independent states. Systems can only be in one independent state. Particles have free movement, so independent states can interchange freely and are equally probable. Particles have number {degrees of freedom, particle} of independent states available. Systems have number of states. Number is two raised to power. For example, systems can have 2^6 = 64 states. States have probability 1/64 = 1 / 2^6. 6 is number of system information bits. Systems with more states have more bits and lower-probability states.
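
As a minimal sketch of the counting above, the following Python functions relate bit number, state number, and state probability, assuming all independent states are equally probable. Function names are illustrative and do not come from the original text.

```python
import math

def state_count(bits: int) -> int:
    """Number of independent states for a given number of binary choices."""
    return 2 ** bits

def state_probability(bits: int) -> float:
    """Probability of each state when all states are equally probable."""
    return 1 / state_count(bits)

def bit_count(states: int) -> float:
    """Number of bits needed to pick one of `states` equally probable states."""
    return math.log2(states)

# The 6-bit example from the text: 64 states, each with probability 1/64.
print(state_count(6), state_probability(6), bit_count(64))  # 64 0.015625 6.0
```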

information: degeneracy

Different degenerate states can have same properties. For example, systems with two particles can have particle energies 0 and 2, 2 and 0, or 1 and 1, all with same total energy.
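
The two-particle example can be checked by enumeration. This sketch assumes particles are distinguishable and that energies are non-negative whole numbers; the function name `microstates` is hypothetical.

```python
from itertools import product

def microstates(total_energy: int, particles: int = 2) -> list[tuple[int, ...]]:
    """List every assignment of whole-number energies that sums to total_energy."""
    levels = range(total_energy + 1)
    return [
        state
        for state in product(levels, repeat=particles)
        if sum(state) == total_energy
    ]

# Three degenerate states share total energy 2.
print(microstates(2))  # [(0, 2), (1, 1), (2, 0)]
```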

information: reversibility

Particle physical processes are reversible in time and space. Physical system states can go to other system states, with enough time.

entropy: probability

Disorder depends on information, so entropy depends on information. Entropy is negative base-2 logarithm of probability. For example, for two states, S = -log2(1 / 2^1) = +1. For 64 states, S = -log2(1 / 2^6) = +6. More states make each state less likely, so disorder and entropy increase.
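
A minimal sketch of this calculation, taking the logarithm in base 2 as the text states, so that entropy comes out in bits. The function name `entropy_bits` is illustrative.

```python
import math

def entropy_bits(probability: float) -> float:
    """Entropy in bits of one state with the given probability."""
    if not 0 < probability <= 1:
        raise ValueError("probability must be in the interval (0, 1]")
    return -math.log2(probability)

print(entropy_bits(1 / 2))   # 1.0, two equally probable states
print(entropy_bits(1 / 64))  # 6.0, 64 equally probable states
```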

entropy: degeneracy probability

Degenerate-state groups have different probabilities, because groups have different numbers of degenerate states. Groups with more members have higher probability because independent states have equal probability. Entropy depends on degeneracy pattern. Going to lower-probability group increases system order and has less entropy. Going to higher-probability group decreases system order and has more entropy.

Lowest-probability groups are reachable from only one other state. High-probability groups are reachable from most other states. Systems are likely to go to higher-probability groups. Systems move toward highest-probability group. In isolated closed systems, highest-probability group has probability much higher than other groups. If system goes to lower-probability group, it almost instantly goes to higher-probability group, before people can observe entropy decrease. Therefore, entropy tends to increase.
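
To illustrate how group size sets group probability, the sketch below uses a toy model that is an assumption, not part of the original text: ten particles, each equally likely to be in the left or right half of a box. Groups are defined by how many particles are on the left, and group entropy is taken as base-2 logarithm of group size.

```python
from math import comb, log2

def group_table(particles: int) -> list[tuple[int, int, float, float]]:
    """For each count k on the left, return (k, members, probability, entropy in bits)."""
    total_states = 2 ** particles
    rows = []
    for k in range(particles + 1):
        members = comb(particles, k)  # number of degenerate states in this group
        rows.append((k, members, members / total_states, log2(members)))
    return rows

for k, members, probability, entropy in group_table(10):
    print(f"{k:2d} on left: {members:4d} states, p = {probability:.4f}, S = {entropy:.2f} bits")
```

In this toy model, the all-left group has 1 member out of 1024 states, while the evenly split group has 252, so the evenly split group is far more probable. The gap widens quickly as particle number grows.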

entropy: additive

Entropy and disorder are additive, because they depend on independent states, degrees of freedom. Systems with independent parts have entropy equal to sum of part entropies. If parts are dependent, entropy is less, because number of different states is less.
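
Additivity follows because state numbers of independent parts multiply, and logarithm turns products into sums. A small sketch, with state numbers chosen only for illustration:

```python
import math

def entropy_bits(states: int) -> float:
    """Entropy in bits of a system with `states` equally probable states."""
    return math.log2(states)

part_a_states = 8    # 3 bits
part_b_states = 16   # 4 bits

# Independent parts: every state of A can pair with every state of B.
independent_states = part_a_states * part_b_states
print(entropy_bits(part_a_states) + entropy_bits(part_b_states))  # 7.0
print(entropy_bits(independent_states))                           # 7.0

# Dependent parts: suppose a relation allows only half of the pairings.
dependent_states = independent_states // 2
print(entropy_bits(dependent_states))                             # 6.0
```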

entropy: heat

Heat is total random translational kinetic energy. Temperature is average random translational kinetic energy. Entropy S is heat energy Q, unavailable to do work, divided by temperature T: S = number of independent particle states = (total random translational kinetic energy) / (average random translational kinetic energy) = Q/T. Kinetic energy is random, and potential energy holds molecules apart in all directions, so heat has no net direction. Average direction is zero.

entropy: energy

At constant pressure and temperature, entropy change is enthalpy change divided by temperature, because heat is enthalpy change at constant pressure and temperature. At constant volume and temperature, entropy change is energy change divided by temperature, because heat is energy change at constant volume and temperature.
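
As a worked example of the constant-pressure relation, the sketch below estimates molar entropy change when ice melts. The enthalpy of fusion of water (about 6.01 kJ/mol at 273.15 K) is a standard handbook value supplied here for illustration, not a value from the original text.

```python
def entropy_change(heat_joules: float, temperature_kelvin: float) -> float:
    """Entropy change in J/K for heat absorbed reversibly at constant temperature."""
    if temperature_kelvin <= 0:
        raise ValueError("temperature must be positive in kelvin")
    return heat_joules / temperature_kelvin

ENTHALPY_OF_FUSION_WATER = 6010.0  # J/mol, approximate handbook value
MELTING_POINT_WATER = 273.15       # K at atmospheric pressure

delta_s = entropy_change(ENTHALPY_OF_FUSION_WATER, MELTING_POINT_WATER)
print(round(delta_s, 1))  # 22.0 J/(mol K)
```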

entropy: gravity

If no gravity, entropy increases as particles spread, because particle occupied volume increases. If gravity, entropy increases as particles decrease separation, because potential energy becomes heat though particle occupied volume decreases. If antigravity, entropy increases as particles increase separation, because potential energy becomes heat and particle occupied volume increases.

entropy: mass

Entropy increases when particle number increases. Matter increase makes more entropy. Entropy increases when particles distribute more evenly, toward thermal equilibrium, and have fewer patterns, lines, edges, angles, shapes, and groupings.

entropy: volume

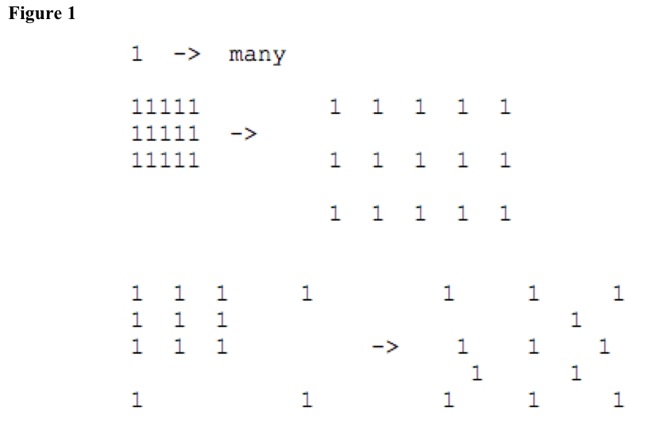

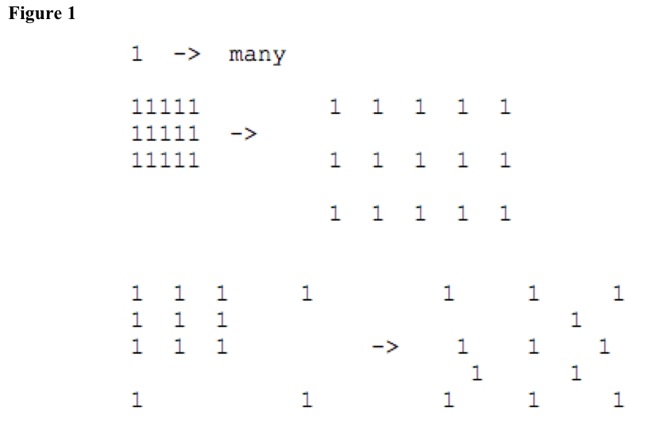

If there are no forces, volume increase makes more possible molecule distributions, less order, and more entropy. If there are forces, volume decrease makes more possible molecule distributions, less order, and more entropy. See Figure 1.
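
For the no-forces case, an ideal gas gives a simple estimate. The sketch below assumes an ideal gas expanding at constant temperature, for which entropy change is n R ln(V2 / V1). This formula is a standard result brought in for illustration. It does not cover the case with forces.

```python
import math

GAS_CONSTANT = 8.314462618  # J/(mol K)

def isothermal_entropy_change(moles: float, initial_volume: float, final_volume: float) -> float:
    """Entropy change in J/K for an ideal gas changing volume at constant temperature."""
    if initial_volume <= 0 or final_volume <= 0:
        raise ValueError("volumes must be positive")
    return moles * GAS_CONSTANT * math.log(final_volume / initial_volume)

# Doubling the volume of one mole adds about 5.76 J/K.
print(round(isothermal_entropy_change(1.0, 1.0, 2.0), 2))  # 5.76
```

Doubling volume doubles the positions available to each particle, which corresponds to one extra bit per particle.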

entropy: directions

Energy dispersal increases entropy, because disorder increases. Increasing number of directions or motion types increases entropy. Mixing makes more disorder and more entropy. More randomness makes more entropy. More asymmetry makes more disorder and more entropy.

entropy: heat

Work makes more friction and heat and more entropy. Making heat makes more randomness and more entropy.

entropy: fields

Field strength decrease disperses energy and makes more entropy.

entropy: force and pressure

Pressure decrease disperses energy and makes more entropy. Force decrease disperses energy and makes more entropy.

entropy: volume

At phase changes, pressure change dP divided by temperature change dT equals entropy change dS divided by volume change dV, because energy changes must be equal at equilibrium: dP / dT = dS / dV, so dP * dV = dT * dS. Volume increase greatly increases entropy.
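
The relation dP / dT = dS / dV can be tried on boiling water. This is a rough sketch under stated assumptions: enthalpy of vaporization about 40.66 kJ/mol at 373.15 K (approximate handbook value), steam treated as ideal gas at 101325 Pa, and liquid volume neglected. None of these numbers come from the original text.

```python
GAS_CONSTANT = 8.314462618  # J/(mol K)

def phase_boundary_slope(entropy_change: float, volume_change: float) -> float:
    """Slope dP/dT in Pa/K along a phase boundary, from dS (J/(mol K)) and dV (m^3/mol)."""
    return entropy_change / volume_change

boiling_point = 373.15             # K
pressure = 101325.0                # Pa
enthalpy_of_vaporization = 40660.0 # J/mol, approximate

delta_s = enthalpy_of_vaporization / boiling_point  # entropy change on boiling
delta_v = GAS_CONSTANT * boiling_point / pressure   # ideal-gas molar volume of steam

slope = phase_boundary_slope(delta_s, delta_v)
print(f"dP/dT is roughly {slope / 1000:.1f} kPa per kelvin")
```

Under these assumptions, boiling pressure rises by a few kilopascals per kelvin near 100 degrees Celsius.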

entropy: increase

Systems increase entropy when disorder, degrees of freedom, and disinformation increase. Information decrease makes more interactions and more entropy. Order decrease, as in state change from liquid to gas, increases entropy.

entropy: decrease

Many factors increase order, regularity, or information, such as more regular space or time intervals, as in stripes and waves. Higher energy concentration, more mass, larger size, higher density, more interactions, more relations, smaller distances, closer interactions, more equilibrium, more steady state, more interaction templates, more directed energy, and more filtering increase order.

More reference point changes, more efficient coding or language, better categorization or classification, more repetition, more shape regularity, and more self-similarity at different distance or time scales increase order. More recursion, bigger algorithms, more processes, more geodesic paths, more simplicity, lower mixing, and more purity increase order. More reconfigurations, more object exchanges, and more combining systems increase order. Fewer functions, fewer behaviors, more resonance, fewer observations, more symmetry, more coordinated motion, and more process coupling increase order.

Larger increase in potential energy increases order, because energy concentrates. Higher increase in fields increases order, because energy increases. Fewer motion degrees of freedom, as in slow and large objects, increase order. More same-type, same-range, and same-size interactions increase order. More and equal influence spheres increase order. Higher space-time curvature increases order. More constant space-time curvature increases order.

Lower harmonics of Fourier series increase order. Fewer elements in Fourier series increase order.

entropy: closed system

In closed systems, entropy tends to increase, because energy becomes more random. Potential energy becomes random kinetic energy by friction or forced motion. Random kinetic energy cannot all go back to potential energy because potential energy has direction. Work kinetic energy becomes random kinetic energy by friction or forced motion. Random kinetic energy cannot all go back to work energy because work energy has direction. Heat energy is already random. Only part, in a direction, can become potential energy or work kinetic energy. Radiation becomes random kinetic energy by collision. Random kinetic energy cannot all go back to radiation energy, because radiation energy requires particles accelerated in direction.

universe entropy

Universe is isolated closed system. It started in low-entropy state and moves to higher entropy states.

Perhaps, at beginning {hot big bang} {primordial fireball}, universe had one particle at one point with smallest possible volume, and so no relations among parts. There were no space fields and no tidal effects. Universe had highly concentrated energy at high temperature and so large contracting forces and high pressure. Particle number remained the same or increased, as particles and radiation split.

Universe expansion increased space volume. Space points became farther from other points. Expansion was greatest at first. Then expansion slowed, because all particles had gravity.

As universe cooled, it created particles, in evenly distributed gas. Entropy increased but was still low.

As universe cooled, gas-particle gravity formed galaxies and stars. Condensed gas had higher entropy but was still low. Potential energy converted to heat as infalling particles collided. Heat and mass concentrated in stars.

Stars are hot compared to space, so stars can transfer energy to planets and organisms. Stars undergoing nuclear fusion make visible light. Visible light has higher energy than heat infrared radiation. On Earth, temperature stays approximately constant. Therefore, visible light energy that impinges on Earth is equal to energy that radiates away from Earth as heat. Because sunlight has higher energy per photon, fewer sunlight photons land on Earth than Earth emits as infrared heat photons. Entropy increases in space, and total universe entropy increases. On Earth, order increases and disorder decreases, mostly in organisms. From universe beginning until now, universe entropy increases, while small-region physical forces and particle motions can cause entropy decreases.
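
The photon-count argument can be put in rough numbers. For thermal radiation, average photon energy is proportional to temperature, so at equal total energy in and out, the ratio of emitted to absorbed photons is about the ratio of source temperature to emitter temperature. The temperatures below (Sun surface about 5772 K, Earth effective emission temperature about 255 K) are assumed approximate values, not figures from the original text.

```python
SUN_SURFACE_TEMPERATURE = 5772.0    # K, approximate
EARTH_EMISSION_TEMPERATURE = 255.0  # K, approximate effective value

def photons_out_per_photon_in(source_temperature: float, emitter_temperature: float) -> float:
    """Emitted photons per absorbed photon when energy in equals energy out.

    Assumes both spectra are thermal, so average photon energy scales with temperature.
    """
    if source_temperature <= 0 or emitter_temperature <= 0:
        raise ValueError("temperatures must be positive in kelvin")
    return source_temperature / emitter_temperature

ratio = photons_out_per_photon_in(SUN_SURFACE_TEMPERATURE, EARTH_EMISSION_TEMPERATURE)
print(round(ratio, 1))  # 22.6
```

Under these assumptions, Earth emits roughly twenty infrared photons for each sunlight photon it absorbs, which is how entropy can increase in space while order increases on Earth.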

Now, universe has many photons, large volume, negligible forces, and even matter distribution, so universe entropy is now large. For example, cosmic microwave background radiation has many randomly moving photons, from soon after universe origin. Photons mostly evenly distribute. They fill whole universe and have little effect on each other. As universe expands, their entropy increases.

Now, universe has many galaxies with central black holes and has black holes formed after supernovae. Black holes are mass concentrations denser than atomic nuclei. Black holes have very high entropy, because particle number is high, volume is small, mass evenly distributes, gravitational force and fields are high, and density is high. Black holes make universe entropy large now.

In the future, universe entropy will increase. Universe will have more black holes and can evolve to have only black holes. Universe will have more local forces. Universe will have more volume. Universe will have more particles. Universe will have more-even particle distribution.

Date Modified: 2022.0224